Problem Description

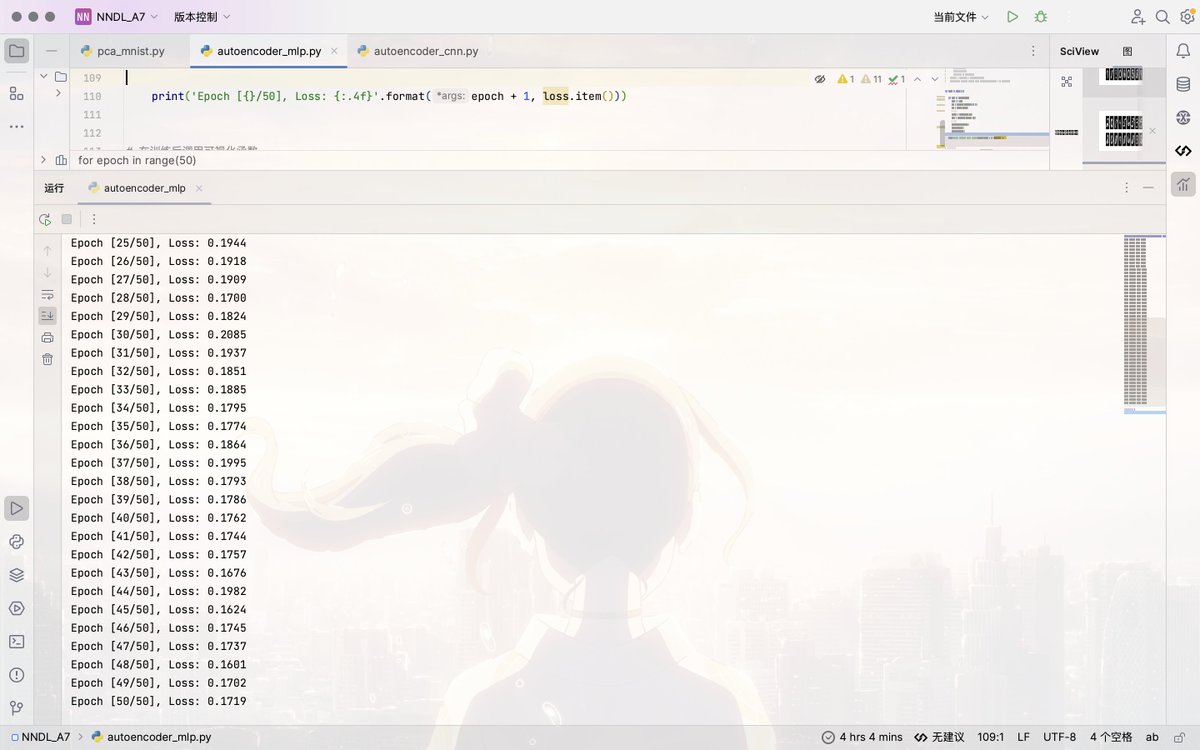

The loss reported during training jumps up and down from one epoch to the next instead of decreasing smoothly.

The Cause

The loss printed for each epoch is the loss of the last batch only, not the average loss over the whole epoch.

    for epoch in range(50):
        for data in train_loader:
            img, _ = data
            img = img.view(img.size(0), -1)  # flatten each image into a vector
            img = img.to(device)
            output = autoencoder(img)
            loss = criterion(output, img)  # reconstruction loss for this batch
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        # `loss` still holds the value from the last batch of the epoch
        print('Epoch [{}/50], Loss: {:.4f}'.format(epoch + 1, loss.item()))
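To see why the last batch alone is a noisy readout, here is a small self-contained simulation. It uses no model at all: the per-batch losses are hypothetical values drawn around an assumed underlying loss. The helper `simulate_epoch`, the decay curve, and the noise level are illustrative assumptions, not measurements from the training run above.

```python
import random
import statistics


def simulate_epoch(num_batches, underlying_loss, noise_std, rng):
    """Return hypothetical per-batch losses: a shared level plus per-batch noise."""
    return [underlying_loss + rng.gauss(0.0, noise_std) for _ in range(num_batches)]


rng = random.Random(0)  # fixed seed so the run is repeatable
last_batch_errors = []  # |last-batch loss - underlying loss| for each epoch
average_errors = []     # |epoch-average loss - underlying loss| for each epoch

for epoch in range(50):
    # Assumed: the underlying loss decays slowly as training progresses.
    underlying_loss = 1.0 / (1.0 + 0.1 * epoch)
    batch_losses = simulate_epoch(100, underlying_loss, 0.05, rng)

    last_batch_errors.append(abs(batch_losses[-1] - underlying_loss))
    average_errors.append(abs(statistics.fmean(batch_losses) - underlying_loss))

print("mean error, last batch:    {:.4f}".format(statistics.fmean(last_batch_errors)))
print("mean error, epoch average: {:.4f}".format(statistics.fmean(average_errors)))
```

Under these assumptions the epoch average tracks the underlying loss much more closely than any single batch does, which is the same effect that makes the printed curve look erratic.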

Solution

Sum the loss of every batch in the epoch, then divide by the number of batches to get the average:

    for epoch in range(50):
        total_loss = 0  # running sum of batch losses for this epoch
        for data in train_loader:
            img, _ = data
            img = img.view(img.size(0), -1)  # flatten each image into a vector
            img = img.to(device)
            output = autoencoder(img)
            loss = criterion(output, img)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            total_loss += loss.item()
        # len(train_loader) is the number of batches in one epoch
        avg_loss = total_loss / len(train_loader)
        print('Epoch [{}/50], Average Loss: {:.4f}'.format(epoch + 1, avg_loss))
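One caveat: dividing by `len(train_loader)` gives the mean of the batch means. That equals the per-sample average only if every batch has the same size. If the last batch is smaller, it gets slightly too much weight. The sketch below shows a sample-weighted alternative. It assumes `criterion` returns the mean over the batch (the default reduction for PyTorch losses); the helper name `epoch_average_loss` and the numbers are hypothetical.

```python
def epoch_average_loss(batch_losses, batch_sizes):
    """Per-sample average loss, given each batch's mean loss and its sample count."""
    if len(batch_losses) != len(batch_sizes):
        raise ValueError("batch_losses and batch_sizes must have the same length")
    total_samples = sum(batch_sizes)
    if total_samples == 0:
        raise ValueError("no samples seen in this epoch")
    # Multiply each batch mean by its size to recover the per-batch sum of losses.
    weighted_sum = sum(loss * size for loss, size in zip(batch_losses, batch_sizes))
    return weighted_sum / total_samples


# Hypothetical epoch: two full batches of 128 and a partial last batch of 44.
losses = [0.50, 0.40, 0.90]
sizes = [128, 128, 44]

simple = sum(losses) / len(losses)             # mean of batch means
weighted = epoch_average_loss(losses, sizes)   # per-sample mean

print("mean of batch means: {:.4f}".format(simple))
print("per-sample mean:     {:.4f}".format(weighted))
```

In the training loop this would amount to accumulating `loss.item() * img.size(0)` and dividing by the total number of samples seen. With equal-sized batches the two averages coincide, so the simpler version above is usually good enough.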